data parsing and validation¶

In this section, I'd like to explore Python features for restructuring and formatting data: parsing, validation, and transformation, including how to optimize and serialize data and turn it into versatile, interoperable objects such as dictionaries, lists, tuples, sets, or arrays, to name a few. I'll do my best to dive deep into these data structures by leveraging some really cool Python libraries!

functional programming using functional tools¶

Functional tools are the fundamental building blocks of functional programming in Python: built-in functions such as map() and filter(), plus reduce() from functools. What's really neat about them is that they can process the iterables you pass in with good time and memory efficiency, and they also mean fewer lines of code to write!

map¶

We can use map() to transform every element of an iterable without writing an explicit for loop. In CPython, map() is implemented in C, so the iteration itself is well optimized. A for loop that appends each result to a new list keeps the whole result list in memory, while map() returns a lazy iterator that produces each item on demand. So instead of an entire result list living in memory, only one item is produced at a time, until you wrap the call in list().
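To see the on-demand behavior in action, here is a minimal sketch. The helper name `square` and the sample list are made up for illustration; the point is only that map() hands back an iterator and does no work until something consumes it.

```python
def square(n):
    """Hypothetical helper used only for this illustration."""
    return n * n


values = [1, 2, 3, 4]

# map() returns a lazy iterator; nothing has been computed yet
lazy = map(square, values)

# pull results one at a time with next()
first = next(lazy)   # 1
second = next(lazy)  # 4

# list() consumes whatever is left in the iterator
rest = list(lazy)    # [9, 16]

# the iterator is now exhausted, so a second pass yields nothing
leftover = list(lazy)  # []

print(first, second, rest, leftover)
```

One consequence worth remembering: a map object can only be walked once, so store the result in a list if you need to reuse it.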

syntax

map(function, iterable, *iterables)

use cases¶

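numbers = [2, 3, 8, 2]

def map_numbers(number):
    # map() calls this once per element, so it receives a single number
    return number**3

# despite the name, this holds each number cubed
triple = list(map(map_numbers, numbers))

>> [8, 27, 512, 8]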

Another common use case is passing a lambda to the map() function.

countries = ["iceland", "poland", "china", "switzerland"]
numbers = [2, 3, 8, 2]
text = "we go running today! trailrunning is fun"


def sort_countries(countries):
    """Return the countries in reverse alphabetical order."""
    return sorted(countries, reverse=True)


def multiply_countries(numbers):
    """Cube each number with a list comprehension, for comparison with map()."""
    return [n**3 for n in numbers]


def map_numbers(number):
    """Use map() to demonstrate the tool; receives one number per call."""
    return number**3


triple = list(map(map_numbers, numbers))


def get_countries(countries):
    """return -> <function get_countries.<locals>.<lambda> at 0x7fd213c8a3b0>"""
    # returns the lambda itself without calling it; the argument is unused
    return lambda country: country.upper()


def map_with_lambda(countries):
    """return -> ['ICELAND', 'POLAND', 'CHINA', 'SWITZERLAND']"""
    return list(map(lambda country: country.upper(), countries))


def str_test(countries):
    """return -> string test ['iceland', 'poland', 'china', 'switzerland']"""
    return f"string test {countries}"


def repr_test(countries):
    """return -> repr test ['iceland', 'poland', 'china', 'switzerland']"""
    return f"repr test {countries}"


def create_uppercase_str(text):
    """This function is used as an arg for the map(arg, iterable) built-in function.

    return ->
    let's map this! -> WE GO RUNNING TODAY! TRAILRUNNING IS FUN
    """
    return text.upper()


# map() over a str visits one character at a time, so split it into words first
map_str_uppercase = map(create_uppercase_str, text.split())

if __name__ == "__main__":
    print(sort_countries(countries))
    print(list(map(len, countries)))
    print(multiply_countries(numbers))
    print(triple)
    print(map_with_lambda(countries))
    print(get_countries(countries))
    print(str_test(countries))
    print(repr_test(countries))
    print("let's map this!", end=" -> ")
    print(" ".join(map_str_uppercase))

reduce¶
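
As a rough sketch of what this tool does: functools.reduce() folds an iterable into a single value by applying a two-argument function cumulatively from left to right. The sample data below is invented for illustration.

```python
from functools import reduce

numbers = [2, 3, 8, 2]

# ((2 * 3) * 8) * 2 -> 96
product = reduce(lambda acc, n: acc * n, numbers)

# an optional third argument seeds the accumulator, which also
# makes reduce() safe to call on an empty iterable
total = reduce(lambda acc, n: acc + n, numbers, 0)   # 15
empty_total = reduce(lambda acc, n: acc + n, [], 0)  # 0

print(product, total, empty_total)
```

Without the seed value, calling reduce() on an empty iterable raises a TypeError, so passing one is a sensible default when the input may be empty.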

filter¶

As the name suggests, filter() keeps only the items in an iterable that pass a test function and returns them as a new lazy iterator.
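
Here is a small sketch of filter() at work. The length threshold and the sample lists are arbitrary choices for the demo.

```python
countries = ["iceland", "poland", "china", "switzerland"]

# filter() keeps the items for which the function returns a truthy value
long_names = filter(lambda name: len(name) > 6, countries)

# like map(), filter() is lazy, so wrap it in list() to see the result
result = list(long_names)
print(result)  # ['iceland', 'switzerland']

# passing None as the function drops falsy items such as 0, "" and None
cleaned = list(filter(None, [0, 1, "", "a", None]))
print(cleaned)  # [1, 'a']
```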

json¶

Working with JSON can be painful sometimes, particularly when the object you're trying to serialize is massive or contains many nested objects, and untangling them into flat objects is a challenge. As a data engineer, I've come across those pain points in the past and have used several different Python libraries to parse JSON data sets. The usual standard one is the built-in json package. The others are ujson and orjson, which I won't go into in detail, but you can read up on those links here. Essentially, orjson supports dataclasses, datetimes, and numpy pretty well, and ujson allows quick conversion between Python objects and JSON data formats. If that's specifically what you're after, over speed, type hints, or schema validation features, then those are the JSON libraries you may want to check out as well, or do a quick comparison and see which fits what you're trying to do.
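
To make the nested-object pain point concrete, here is a minimal sketch using only the standard json package. The record and the `flatten` helper are hypothetical, written just for this illustration; real data will likely need extra handling for lists of objects.

```python
import json

# hypothetical nested record, invented for this illustration
record = {
    "id": 7,
    "owner": {"name": "alice", "contact": {"email": "alice@example.com"}},
    "tags": ["admin", "engineering"],
}


def flatten(obj, parent_key="", sep="."):
    """Collapse nested dicts into a single level with dotted keys."""
    flat = {}
    for key, value in obj.items():
        new_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            # recurse into nested dicts, carrying the key path along
            flat.update(flatten(value, new_key, sep))
        else:
            flat[new_key] = value
    return flat


# serialize to a JSON string, then parse it back into Python objects
payload = json.dumps(record)
restored = json.loads(payload)

flat = flatten(restored)
print(flat)
```

The dotted-key layout is just one convention; it keeps the key path readable while giving you a flat dictionary that is easy to load into a table.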

json util¶

Converting data from CSV to JSON typically only makes sense when you're dealing with a large volume of data that needs to scale for more complex data manipulation or transformation projects, like a data science project that involves complex visualization tasks and needs those visualizations to stay performant under heavy I/O. As you go along, you might need to revise some large data content to fit what you're trying to visualize or manipulate, for better readability and interoperability. JSON is very versatile here because it's a universal data format that works across heterogeneous platforms and languages. Lastly, it's much easier to devise different data structures, like a hashmap or an array, to improve read/write performance.
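
As a small sketch of the hashmap-versus-array idea, the snippet below converts a tiny in-memory CSV with the standard library only. The column names and values are made up and stand in for a real file.

```python
import csv
import io
import json

# tiny in-memory CSV standing in for a real file; columns are made up
raw_csv = "id,season,sex\n1,Spring,Female\n2,Winter,Male\n"

# DictReader yields one dict per row, keyed by the header line
rows = list(csv.DictReader(io.StringIO(raw_csv)))

# array layout: a JSON list of row objects
as_array = json.dumps(rows)

# hashmap layout: key each row by its id for direct lookups
by_id = {row["id"]: row for row in rows}
as_hashmap = json.dumps(by_id)

print(as_array)
print(by_id["2"]["season"])  # Winter
```

Note that the csv module reads every value as a string, so numeric columns need an explicit conversion before they are written out as JSON numbers.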

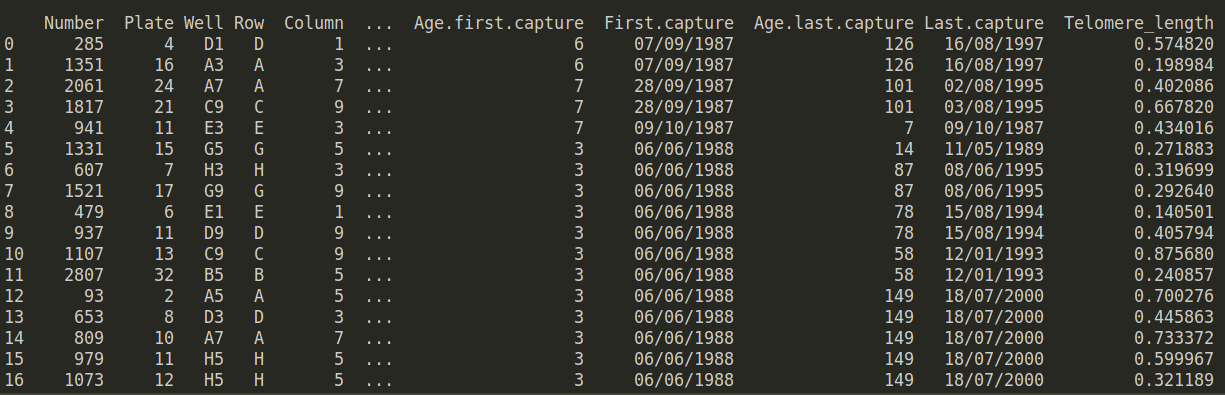

Let's dive into a small sample CSV dataset where n = 1248 with 33 observations. For the sake of this demo, my focus is to showcase snippets of JSON optimization and manipulation approaches you can take!

Here is the data source that I used, which you can tinker with further if you're so inspired! :)

This code should run like a charm! You can test it yourself by copying the code directly and executing it once you've downloaded the data source linked above. Let me know if you find some other cool ways to serialize and optimize the JSON format.

I'll continue extending this codebase here, so check back soon!

import os

import pandas as pd

# TODO: add map, lambda, filter, zip, enumerate parsing features to this code!


class JsonParse:
    """Object to showcase JSON parsing functionalities."""

    def __init__(self):
        """Initialize instance attributes."""
        # NOTE: changes the working directory so relative data paths resolve
        os.chdir("../../docs/data")
        self.fruit = ["tangerine", "dragonfruit", "pineapple", "kiwi"]

    def convert_csv_to_json(self, csv):
        """Convert raw data to json"""
        # specify nrows=30 to preview data observations
        dfjs = pd.read_csv(filepath_or_buffer=csv, header=0)
        dfjs.to_json("datatest.json")

    def read_json_to_df(self, data):
        """Load json into dataframe."""
        # read_json() already returns a DataFrame, so no extra wrapping is needed
        self.df = pd.read_json(data)
        # to_string prints entire dataframe
        # print(f"loading json to a dataframe: \n{self.df.to_string()}")
        return self.df

    def view_attributes(self):
        """Data preview."""
        print(f"\nload simple data attributes: \n{self.df.head()}")
        # info() prints its own summary and returns None, so call it directly
        self.df.info()
        print(self.df.shape)

    def extract_subset(self):
        """Iteratively chunkify dataset into subsets by slicing a large dataframe into n rows."""
        chunks = self.df
        n = 500
        # chunkifying data into multiple dataframes using list comprehension
        chunk_dfs = [chunks[i : i + n] for i in range(0, len(chunks), n)]
        # loop instead of indexing so this also works with fewer than three chunks
        for number, chunk in enumerate(chunk_dfs, start=1):
            print(f"chunk {number}: \n{chunk}")

    def group_subset(self):
        """Group dataframe based on a specified column."""
        # groupby() returns a new object, so keep it and aggregate it
        grouped = self.df.groupby("Plate")
        print(f"rows per Plate: \n{grouped.size()}")

    def convert_df_to_dict(self):
        """Converting dataframe into different dictionary structures by passing a param "orient",
        transposing it, or converting it into a list.

        "orient" is a useful param that lets you dictate the orientation of your output.
        More tips here -> https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.to_dict.html

        return -> <zipdict>
        {'AA_Spring': [0.432042246, 'Female', 5], 'Summer': [0.49707565900000006, 'Female', 7],
        'Autumn': [0.276302249, 'Female', 11],
        'Winter': [0.49791895700000005, 'Male', 1]}

        return -> <regdict>
        {'Number': {0: 285, 1: 1351...},
        'Well': {0: 'D1', 1...},...}}
        """
        # method 1 - regular dictionary - {k:v}
        self.regdict = self.df.to_dict(orient="dict")
        # method 2 - use zip() to transpose your dictionary - {i: [x, y, z]}
        # where "i" is the key and "x, y, z" are the values in the list
        # (repeated Season keys overwrite each other, so the last row per season wins)
        zipdict = {
            i: [x, y, z]
            for i, x, y, z in zip(
                self.df.Season, self.df.Telomere_length, self.df.Sex, self.df.Month
            )
        }
        print(f"zip dictionary is \n{zipdict}")

    def extract_subset_dict(self):
        """Fetch a subset of dictionary based on some dict keys."""
        # requires convert_df_to_dict() to have populated self.regdict
        subset_keys = ("Maternal.age", "Age.first.capture", "Telomere_length")
        subset = {x: self.regdict[x] for x in subset_keys if x in self.regdict}
        print(f"subset of a regular dictionary is \n{subset}")

    def normalizing_nested_json(self):
        """Unnesting JSON and flatten it using json_normalize() method."""
        normjson = pd.json_normalize(self.regdict)
        # normjson.to_dict("records")
        print(f"\n normalized nested json \n{normjson}")

    def use_lambda(self, fruit):
        """Use a lambda function with map() to title-case fruits."""
        # pass the list itself; calling fruit() would raise a TypeError
        result = map(lambda name: name.title(), fruit)
        for f in result:
            print(f, end=" ")


def main():
    script = JsonParse()
    # script.convert_csv_to_json("data3_Data_heritability.csv")
    # script.read_json_to_df("data3.json")
    # script.view_attributes()
    # script.extract_subset()
    # script.group_subset()
    # script.convert_df_to_dict()
    # script.extract_subset_dict()
    # script.normalizing_nested_json()
    script.use_lambda(script.fruit)


if __name__ == "__main__":
    main()

msgspec¶

One interesting package I've recently discovered that can help speed up serialization and adds schema evolution and validation capabilities is msgspec. It's built around type annotations. It supports both JSON and MessagePack, a compact binary format that is often faster to encode and decode than JSON. If you're parsing JSON files on a regular basis and you're hitting performance or memory issues, consider checking it out!

- It's fast and friendly

- Encode messages as JSON or MessagePack

- Decode messages back into Python types

- It allows you to define schemas for the records you’re parsing

- It lets you define only the fields you care about

- Better validation messages - it gives you descriptive, type-aware error messages rather than just a generic 500 error

One point I want to raise is that msgspec also supports schema evolution and validation. I find this super useful, especially when data is constantly evolving. These are nice features to have when a client of your data, like an API, suddenly changes one of its params without telling you: msgspec's schema evolution rules are designed so that clients and servers on different versions of a schema can keep exchanging messages. And if one of those records is missing a required field, or a value has the wrong data type, like an int instead of a str, the parser will yell at ya to check it out before it continues processing! With standard JSON libraries, schema validation has to happen separately.
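
To make that last point concrete, here is a sketch of the separate validation step that the standard json module leaves to you. The `validate_user` helper and the field names are hypothetical; this is hand-rolled checking for illustration, not what msgspec does internally.

```python
import json


def validate_user(data):
    """Hypothetical hand-written check: json.loads() does none of this."""
    if not isinstance(data.get("name"), str):
        raise TypeError("Expected `str` for `name`")
    for i, group in enumerate(data.get("groups", [])):
        if not isinstance(group, str):
            raise TypeError(
                f"Expected `str`, got `{type(group).__name__}` at groups[{i}]"
            )
    return data


# json.loads() happily accepts the wrong type inside "groups"
parsed = json.loads('{"name": "bob", "groups": [123]}')

error = None
try:
    validate_user(parsed)
except TypeError as exc:
    # the bad value is only caught by the extra validation pass
    error = str(exc)

print(error)
```

Every new field means another hand-written check like the ones above, which is the bookkeeping a schema-aware decoder takes off your plate.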

install¶

pip install msgspec

usage from doc¶

from typing import Optional, Set

import msgspec

class User(msgspec.Struct):
    """A new type describing a User"""
    name: str
    groups: Set[str] = set()
    email: Optional[str] = None

Encode messages as JSON or MessagePack.

>> alice = User("alice", groups={"admin", "engineering"})

>> alice

# User(name='alice', groups={"admin", "engineering"}, email=None)

>> msg = msgspec.json.encode(alice)

>> msg

# b'{"name":"alice","groups":["admin","engineering"],"email":null}'

Decode messages back into Python types (with optional schema validation).

>> msgspec.json.decode(msg, type=User)

# User(name='alice', groups={"admin", "engineering"}, email=None)

>> msgspec.json.decode(b'{"name":"bob","groups":[123]}', type=User)

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
msgspec.ValidationError: Expected `str`, got `int` - at `$.groups[0]`

pydantic¶

The first time I heard about pydantic was when I was listening to the Python podcast "Talk Python To Me", hosted by Michael Kennedy. I was super intrigued when the creator of pydantic, Samuel Colvin, talked about what led him to this awesome creation. If you want to hear all the cool stuff about how pydantic came to be, check out the podcast; it's totally inspiring and worth your time. I promise! :)

In a nutshell, pydantic is a data validation and parsing Python module that takes advantage of type annotations by enforcing type hints at runtime. It's essentially an abstraction layer, like adding sugar-free whipped frosting to a cake: it doesn't change the ingredients or logic used, but it makes the cake taste extra yummy on the exterior. This frosting layer handles data parsing and validation once it's integrated into your codebase.

type annotations¶

Type annotations, or type hints, declare the type a variable, function parameter, or return value is expected to have. These types can be a boolean, integer, string, or any other type you can think of. Python itself does not enforce them at runtime; tools such as type checkers, and libraries like pydantic, read the annotations and act on them.
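
Here is a quick sketch of how plain Python treats annotations, using only the standard library. The `greet` function is a made-up example.

```python
from typing import get_type_hints


def greet(name: str, times: int = 1) -> str:
    """Hypothetical function; the annotations document the expected types."""
    return " ".join([f"hi {name}"] * times)


# annotations are stored as metadata and can be inspected
hints = get_type_hints(greet)
print(hints)

# Python itself does not check them: the int below is accepted
# even though `name` is annotated as str
result = greet(42)
print(result)  # hi 42
```

The call with the wrong type goes through silently, which is the gap a runtime validation layer is meant to close.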

Pydantic supports these standard library types, including many commonly used types. I'm not going to go too deep into these topics here, but you can read up on them yourself.

Also, for a more detailed explanation of type hints theory, which I find pretty cool, check it out here!

install¶

via pip install

pip install pydantic

via source

pip install git+https://github.com/pydantic/pydantic@main#egg=pydantic

# or with extras that contain email and/or file support dependencies

pip install "git+https://github.com/pydantic/pydantic@main#egg=pydantic[email,dotenv]"